Introduction

In a previous post, I talked about the home k8s cluster I built in 2022, specifically its dual-stack network setup with Cilium.

It had two main purposes:

- first, to practice k8s on a daily basis: even though it is a bit overhyped, I really enjoy using k8s and I want to maintain and develop my skills with it.

- second, because after many years, I decided to cancel my dedicated server subscription and move my services back home to be self-hosted.

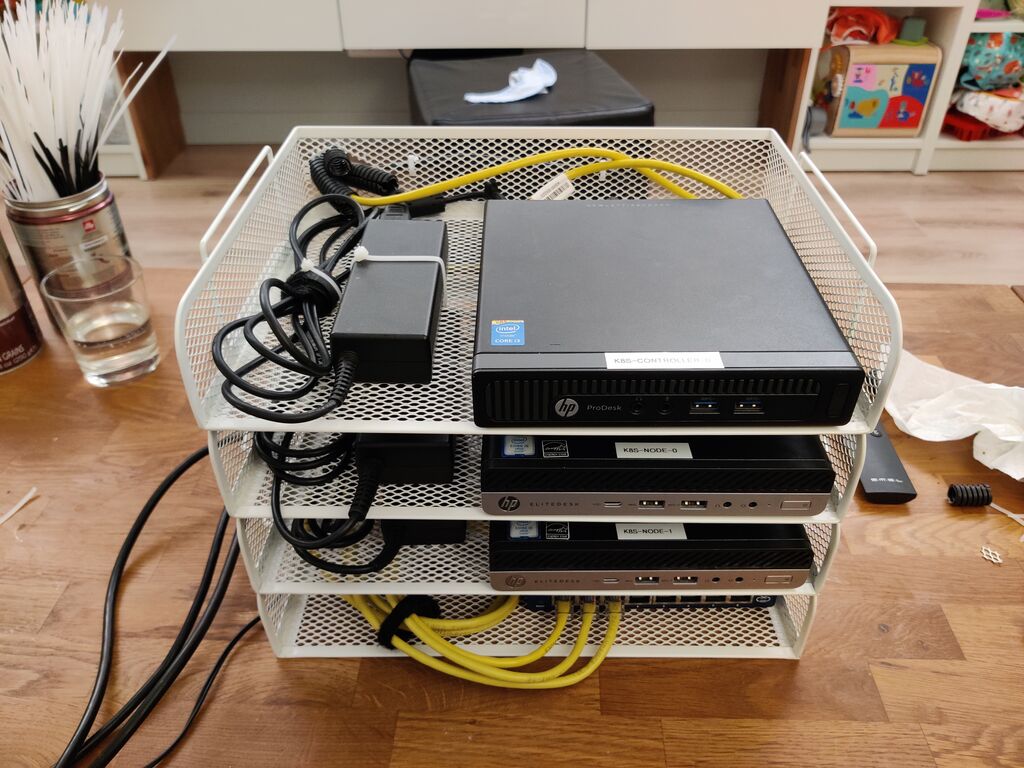

This cluster was built around 3 little machines:

- k8s-controller-0: an HP Prodesk 400 G1 Mini featuring a dual-core (4 threads) Core i3 4th gen and 16GB of RAM

- k8s-node-0: an HP Elitedesk 800 G3 Mini with a quad-core (4 threads) Core i5 6th gen, and 24GB of RAM

- k8s-node-1: another HP Elitedesk 800 G3 Mini with the same specs.

So, a compact and powerful setup.

I’m fond of these HP Minis: they can be bought used for really cheap, and they’re powerful and quite serviceable. (All costs considered, they’re cheaper than Raspberry Pis, more powerful, do not consume much more power, have proper storage interfaces (SATA and NVMe, not some trashy microSD), and… are available.)

The base software stack was the following:

- Vanilla Kubernetes deployed by kubeadm: 1 controller, 2 worker nodes.

- CoreDNS (provided by kubeadm)

- Cilium as CNI

- MetalLB to provide LoadBalancer objects and expose services on my network

- ingress-nginx as HTTP ingress provider

- cert-manager with ACME webhook for Gandi for TLS certificate automation

- Longhorn to provide replicated and snapshotted PersistentVolume objects across the cluster, with no single point of failure and without relying on an external NAS.

I was really satisfied with this setup. It was obviously overkill and had a few drawbacks (the main one being that, despite having 3 nodes, it only had one controller and therefore wasn’t highly available), but it was more than fine for my needs.

After 6 months, k8s-node-1 suddenly died. I was not able to resuscitate it: the motherboard was clearly shot. Bad luck, that’s life.

I had gotten a great deal at the time on these 2 HP Elitedesk 800 G3, and I knew I wouldn’t find another one that cheap. I wasn’t happy to spend around 150€ to replace this node with no real need for it, the cluster being overkill as I said. So for a few weeks, the cluster ran in a “degraded” state, with only one worker node.

My decision was to stop the experimentation, trash this cluster, and rebuild a smaller one capitalizing on what I learned.

The new cluster

I still want K8s, but without any need for high-availability and wanting more simplicity, I knew a single-node cluster was the way to go.

With all services on one machine, there is no need for Longhorn: PersistentVolumes can live directly on the node’s local storage, which simplifies both setup and maintenance.

This is totally doable with kubeadm but it seemed a bit too heavy and uselessly cluttered with features I won’t use.

That’s why I decided to use K3s.

The main selling points for me were the use of a SQLite database to store the cluster state in place of an etcd cluster, and the simplicity of upgrades.

But apart from that main change, my needs stay the same, so all the system services I used with K8s will also be deployed on this cluster.

In particular, I found Flannel (the default CNI deployed by K3s) to lack some IPv6 features I need, so once again I will be using Cilium, which I was really satisfied with.

Deployment

To begin, I took the remaining HP Elitedesk 800 G3 (previously k8s-node-0), and installed a fresh Debian 11 on it.

K3s installation

I used this K3s config.yaml file:

---
node-ip: 192.168.16.24,2001:db8:7653:216::1624
kube-controller-manager-arg: node-cidr-mask-size-ipv6=112
cluster-cidr: 10.96.0.0/16,2001:db8:7653:2aa:cafe:0::/96
service-cidr: 10.97.0.0/16,2001:db8:7653:2aa:cafe:1::/112
flannel-backend: none
disable:
  - traefik
  - servicelb
disable-network-policy: true
disable-kube-proxy: true
default-local-storage-path: /srv/k3s-local-storage

Some explanations:

- node-ip configures the local IPs of the server (they must match the IPs configured on its network interface)

- kube-controller-manager-arg: here I override the default IPv6 subnet size assigned to each node to give addresses to its pods.

- cluster-cidr: the internal main subnet for pods; each node subnet will be cut from this one (/24 for IPv4, /112 for IPv6).

- service-cidr: the internal main subnet for services

- then I disabled:

  - the Flannel CNI and its network-policy management

  - Traefik (I’m keeping ingress-nginx for now)

  - ServiceLB, the K3s integrated LoadBalancer provider (I will use MetalLB)

  - kube-proxy: no need for it, Cilium will provide all the needed connectivity.

- finally I customized the path where PersistentVolumes will be locally provisioned
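To make the subnet sizing concrete, here is a minimal Python sketch (standard library only) that works out how many per-node subnets fit in each cluster CIDR and how many addresses each node gets. The CIDRs and the IPv6 mask come from the config above; the /24 IPv4 mask is the Kubernetes default mentioned in the explanation. The function name is purely illustrative.

```python
import ipaddress

# Values copied from the K3s config above.
cluster_cidrs = ["10.96.0.0/16", "2001:db8:7653:2aa:cafe:0::/96"]
service_cidrs = ["10.97.0.0/16", "2001:db8:7653:2aa:cafe:1::/112"]

# Per-node mask sizes: /24 is the default for IPv4,
# /112 comes from node-cidr-mask-size-ipv6=112.
node_mask = {4: 24, 6: 112}


def node_subnet_budget(cidr):
    """Return (number of per-node subnets, addresses per node subnet)."""
    net = ipaddress.ip_network(cidr)
    mask = node_mask[net.version]
    subnets = 2 ** (mask - net.prefixlen)
    addresses = 2 ** (net.max_prefixlen - mask)
    return subnets, addresses


for cidr in cluster_cidrs:
    subnets, addresses = node_subnet_budget(cidr)
    print(f"{cidr}: {subnets} node subnets of {addresses} addresses each")

# Pod and service ranges should not overlap within an address family.
for pod, svc in zip(cluster_cidrs, service_cidrs):
    assert not ipaddress.ip_network(pod).overlaps(ipaddress.ip_network(svc))
```

This also shows why the override is needed: the default IPv6 per-node mask is /64, which cannot be carved out of a /96 cluster CIDR, whereas /112 can.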

Then:

> mkdir -p /etc/rancher/k3s

> cp k3s_config.yaml /etc/rancher/k3s/config.yaml

> curl -sfL https://get.k3s.io | sh -

Cilium deployment

I used Helm, with the following values:

---
k8sServiceHost: 192.168.16.21
k8sServicePort: 6443
ipv4:
  enabled: true
ipv6:
  enabled: true
ipam:
  mode: cluster-pool
  operator:
    clusterPoolIPv4PodCIDRList: "10.96.0.0/16"
    clusterPoolIPv6PodCIDRList: "2001:db8:7653:2aa:cafe:0::/96"
    clusterPoolIPv4MaskSize: 24
    clusterPoolIPv6MaskSize: 112
bpf:
  masquerade: true
enableIPv6Masquerade: false
kubeProxyReplacement: strict
extraConfig:
  enable-ipv6-ndp: "true"
  ipv6-mcast-device: "eno1"
  ipv6-service-range: "2001:db8:7653:2aa:cafe:1::/112"
operator:
  replicas: 1

This config matches what I used previously with K8s, for more details please refer to my previous post.
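Since Cilium in cluster-pool mode allocates pod subnets from its own pool, it seems sensible to keep that pool aligned with the CIDRs declared to K3s. The following Python sketch is one way to cross-check the two configs. The values are copied by hand from this post, and the helper names are hypothetical, not part of K3s or Cilium.

```python
import ipaddress

# Values copied by hand from the K3s config and the Cilium Helm values.
K3S = {
    "cluster-cidr": "10.96.0.0/16,2001:db8:7653:2aa:cafe:0::/96",
    "service-cidr": "10.97.0.0/16,2001:db8:7653:2aa:cafe:1::/112",
}
CILIUM = {
    "clusterPoolIPv4PodCIDRList": "10.96.0.0/16",
    "clusterPoolIPv6PodCIDRList": "2001:db8:7653:2aa:cafe:0::/96",
    "clusterPoolIPv4MaskSize": 24,
    "clusterPoolIPv6MaskSize": 112,
    "ipv6-service-range": "2001:db8:7653:2aa:cafe:1::/112",
}


def split_dual_stack(value):
    """Split a 'v4,v6' K3s value into {4: network, 6: network}."""
    nets = (ipaddress.ip_network(part.strip()) for part in value.split(","))
    return {net.version: net for net in nets}


def find_mismatches(k3s, cilium):
    """Return a list of human-readable mismatches (empty when consistent)."""
    problems = []
    pods = split_dual_stack(k3s["cluster-cidr"])
    services = split_dual_stack(k3s["service-cidr"])
    for version in (4, 6):
        pool = ipaddress.ip_network(cilium[f"clusterPoolIPv{version}PodCIDRList"])
        if pool != pods[version]:
            problems.append(f"IPv{version} pool {pool} != cluster-cidr {pods[version]}")
        mask = cilium[f"clusterPoolIPv{version}MaskSize"]
        if not pool.prefixlen <= mask <= pool.max_prefixlen:
            problems.append(f"IPv{version} mask /{mask} does not fit in {pool}")
    svc6 = ipaddress.ip_network(cilium["ipv6-service-range"])
    if svc6 != services[6]:
        problems.append(f"ipv6-service-range {svc6} != service-cidr {services[6]}")
    return problems


print(find_mismatches(K3S, CILIUM) or "configs agree")
```

With the values from this post the list of mismatches is empty; changing either side (for example the IPv4 pool) makes the corresponding message appear.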

MetalLB

I also used Helm here, then applied the following manifests to configure it in L2 mode for my network:

---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: prod-pool
  namespace: kube-system
spec:
  addresses:
    - 192.168.16.120-192.168.16.129
    - 2001:db8:7653:216:eeee:fac:ade::/112
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: prod
  namespace: kube-system
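In L2 mode MetalLB answers ARP/NDP for the service addresses, so the pool has to sit on the same network segment as the node. Here is a small Python sketch that sizes the pool and checks it against the LAN. The pool entries come from the manifest above, but the LAN prefixes (/24 and /64) are an assumption: the post only gives the node addresses, not their prefix lengths.

```python
import ipaddress

# Pool entries copied from the IPAddressPool above.
pool_entries = [
    "192.168.16.120-192.168.16.129",
    "2001:db8:7653:216:eeee:fac:ade::/112",
]

# ASSUMPTION: LAN prefixes guessed from the node IPs; adjust to the real network.
lan_networks = [
    ipaddress.ip_network("192.168.16.0/24"),
    ipaddress.ip_network("2001:db8:7653:216::/64"),
]


def pool_bounds(entry):
    """Return (first, last) address of a pool entry given as a range or a CIDR."""
    if "-" in entry:
        first, last = (ipaddress.ip_address(p.strip()) for p in entry.split("-"))
    else:
        net = ipaddress.ip_network(entry)
        first, last = net[0], net[-1]
    return first, last


def pool_size(entry):
    """Number of addresses covered by a pool entry."""
    first, last = pool_bounds(entry)
    return int(last) - int(first) + 1


def on_lan(entry):
    """True when both ends of the entry fall inside one of the LAN networks."""
    first, last = pool_bounds(entry)
    return any(first in lan and last in lan for lan in lan_networks)


for entry in pool_entries:
    print(f"{entry}: {pool_size(entry)} addresses, on LAN: {on_lan(entry)}")
```

The IPv4 range is deliberately small (10 addresses), while the IPv6 /112 leaves room for 65536 services.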

ingress-nginx

Since I want to expose public and private HTTP services separately, I deployed 2 ingress controllers, both with Helm, using the following values:

---
controller:
  ingressClassByName: true
  ingressClassResource:
    controllerValue: k8s.io/ingress-nginx-public
    enabled: true
    name: public
  service:
    ipFamilies:
      - IPv4
      - IPv6
    ipFamilyPolicy: RequireDualStack
---
controller:
  ingressClassByName: true
  ingressClassResource:
    controllerValue: k8s.io/ingress-nginx-private
    enabled: true
    name: private
  service:
    ipFamilies:
      - IPv4
      - IPv6
    ipFamilyPolicy: RequireDualStack

The public ingress-controller has its IP and port opened/forwarded on my home public IP; the other one is only reachable from my private network and VPN.

Then in my ingress resources, I just have to add one line to use the correct controller and expose my service publicly or privately:

---
apiVersion: networking.k8s.io/v1
kind: Ingress
[...]
spec:
  ingressClassName: private
  [...]

cert-manager

Once again, I deployed it with the official Helm chart, then configured everything to validate my domain using Gandi’s API.

> helm install cert-manager jetstack/cert-manager --namespace cert-manager --create-namespace --version v1.10.1 --set installCRDs=true

> helm install cert-manager-webhook-gandi cert-manager-webhook-gandi --repo https://bwolf.github.io/cert-manager-webhook-gandi --version v0.2.0 --namespace cert-manager --set features.apiPriorityAndFairness=true --set logLevel=2

I provided my Gandi API key as a Secret:

---
apiVersion: v1
data:
  api-token: ************
kind: Secret
metadata:
  name: gandi-credentials
  namespace: cert-manager
type: Opaque
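One detail worth spelling out: values under `data` in a Secret must be base64-encoded (the alternative is the `stringData` field, which accepts plain strings). Here is a minimal Python sketch of the encoding step. The token below is a made-up placeholder, and the helper names are illustrative only.

```python
import base64


def encode_secret_value(plaintext):
    """Base64-encode a string the way a Secret's `data` field expects it."""
    return base64.b64encode(plaintext.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded):
    """Reverse operation, handy to double-check what is stored in the Secret."""
    return base64.b64decode(encoded).decode("utf-8")


# Hypothetical placeholder, not a real Gandi token.
example_token = "not-a-real-gandi-token"

encoded = encode_secret_value(example_token)
print(f"api-token: {encoded}")

# Round trip: decoding gives back the original token.
assert decode_secret_value(encoded) == example_token
```

Keep in mind that base64 is an encoding, not encryption, so the manifest should be treated as sensitive as the token itself.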

And finally added a ClusterIssuer (more convenient than a namespaced Issuer since I’m the sole user of the cluster):

---
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt
spec:
  acme:
    email: mysuperpersonalemail@gmail.com
    privateKeySecretRef:
      name: letsencrypt
    server: https://acme-v02.api.letsencrypt.org/directory
    solvers:
      - dns01:
          webhook:
            config:
              apiKeySecretRef:
                key: api-token
                name: gandi-credentials
            groupName: acme.bwolf.me
            solverName: gandi

Conclusion

After doing all of that, I had replicated the same base setup I had with my previous cluster, this time on a single node. I finally had everything in place to move my stuff onto this cluster/server.

After a few weeks, I’m really happy with it: I encountered absolutely no issues, operating it is virtually the same thing as with a “real” K8s cluster, but in a smaller and leaner package.

The local-path-provisioner that comes preinstalled is a really neat feature: it provides standard PersistentVolume objects while still using local storage.

For advanced use cases, the ability to disable any unwanted component is especially nice and makes k3s suitable for more needs than one might think at first.

Of course I won’t be pushing it for large production clusters, but for small and medium setups, it’s just great.